To do user research right, you have to get out of your own head. User research is a systematic process of observing and talking to real people to understand their behaviors, needs, and motivations. You define clear goals, recruit the right participants, and then analyze what you learn so you can make data-driven decisions that actually improve your product.

Why User Research Is Your Product’s Secret Weapon

Doing user research is so much more than checking a box on your project plan. It’s a real commitment to building products that people will actually use—and hopefully, love.

It’s the most reliable way I know to close the gap between what you think your users want and what they genuinely need. Skip this step, and you’re basically flying blind, relying on internal hunches that can lead your product way off course.

This process is the bedrock of solid product decisions. It pulls subjective opinions out of the room and replaces them with real, observable evidence from the people who matter most: your customers. When you invest time in understanding their world, you fuel smarter strategies, happier users, and real business growth.

The Clear Business Impact of Research

Let's be clear: digging into user insights isn't just a feel-good exercise. It delivers a measurable return. It helps your team prioritize features that solve actual problems, which stops you from wasting months of development time on ideas that are destined to fall flat. Understanding user pain points also lets you sand down the rough edges of your product, leading to a much smoother, more intuitive experience.

"Failing to prioritize user research is risky and can lead to poorly designed products and services that are difficult to access."

The evidence is pretty compelling. A big survey of 800 product professionals found that when user research is deeply embedded in development, the business benefits are huge.

An incredible 83% reported enhanced product usability, and 63% saw higher customer satisfaction. On top of that, companies that truly wove research into their core strategy reported outcomes 2.7 times better than their competitors overall, with even bigger gaps on some individual metrics, such as a 3.6x lift in active user engagement. You can see more of the data on how user research impacts business goals in Maze's report.

This dedication to understanding your audience is what a strong user experience is all about. When you ground your work in real user data, you make sure every design choice, from the placement of a button to the entire information architecture, serves a clear purpose. If you're looking to connect these dots, our article on what user experience design is makes a great place to start.

Ultimately, user research is your secret weapon. It de-risks your roadmap and helps you build a product that actually wins in the market.

Building Your Research Foundation

Great user research doesn't just happen. It doesn’t kick off with the first participant interview or usability test. It starts much earlier, with a rock-solid plan. This is the stage where you get your team on the same page, define what a “win” actually looks like, and build the scaffolding for the entire study.

Honestly, without this foundation, you’re just guessing. You risk ending up with a pile of fuzzy, unactionable data that doesn’t help anyone.

Your first move? Define your research goals with absolute clarity. These goals need to be a direct bridge between the research you're about to do and a real, tangible business or product objective. Don't fall into the trap of starting a study just to "learn about our users." That’s way too vague.

Instead, tie your research to specific questions the team is wrestling with right now. Are you exploring a totally new product idea? Your goal might be to deeply understand the day-to-day workflows of your target audience to spot needs they haven’t even articulated yet. Or maybe you're tweaking an existing feature? In that case, your goal is probably to pinpoint the exact friction points in the current user journey.

From Broad Goals to Sharp Questions

Once you’ve got those high-level goals locked in, it's time to drill down. You need to translate them into specific, answerable research questions. Quick clarification: these are the questions for you and your team, not the ones you'll ask participants directly. Think of them as your North Star, keeping every single part of your study focused and on track.

A good research question is:

- Specific: It zooms in on a particular piece of the user experience.

- Actionable: The answer will directly help your team make a decision.

- Practical: You can realistically find the answer with the time and resources you have.

For instance, a weak research question sounds like, "What do users think of our dashboard?" It's a dead end. A much stronger version would be, "What are the primary tasks users are trying to accomplish on the dashboard, and what specific information do they need to complete them successfully?" See the difference? The second one immediately points you toward what to observe and ask about.

A well-crafted research plan is your most powerful tool for stakeholder alignment. It transforms abstract goals into a concrete roadmap, showing everyone involved what you're trying to learn, why it matters, and how you're going to get there.

This whole planning process ensures every step—from finding participants to analyzing the data—serves a clear purpose. Managing these moving parts efficiently is a core principle of effective creative operations management, making sure your research efforts actually deliver maximum impact. It’s your best defense against scope creep and keeps the team laser-focused on insights that will truly move the needle.

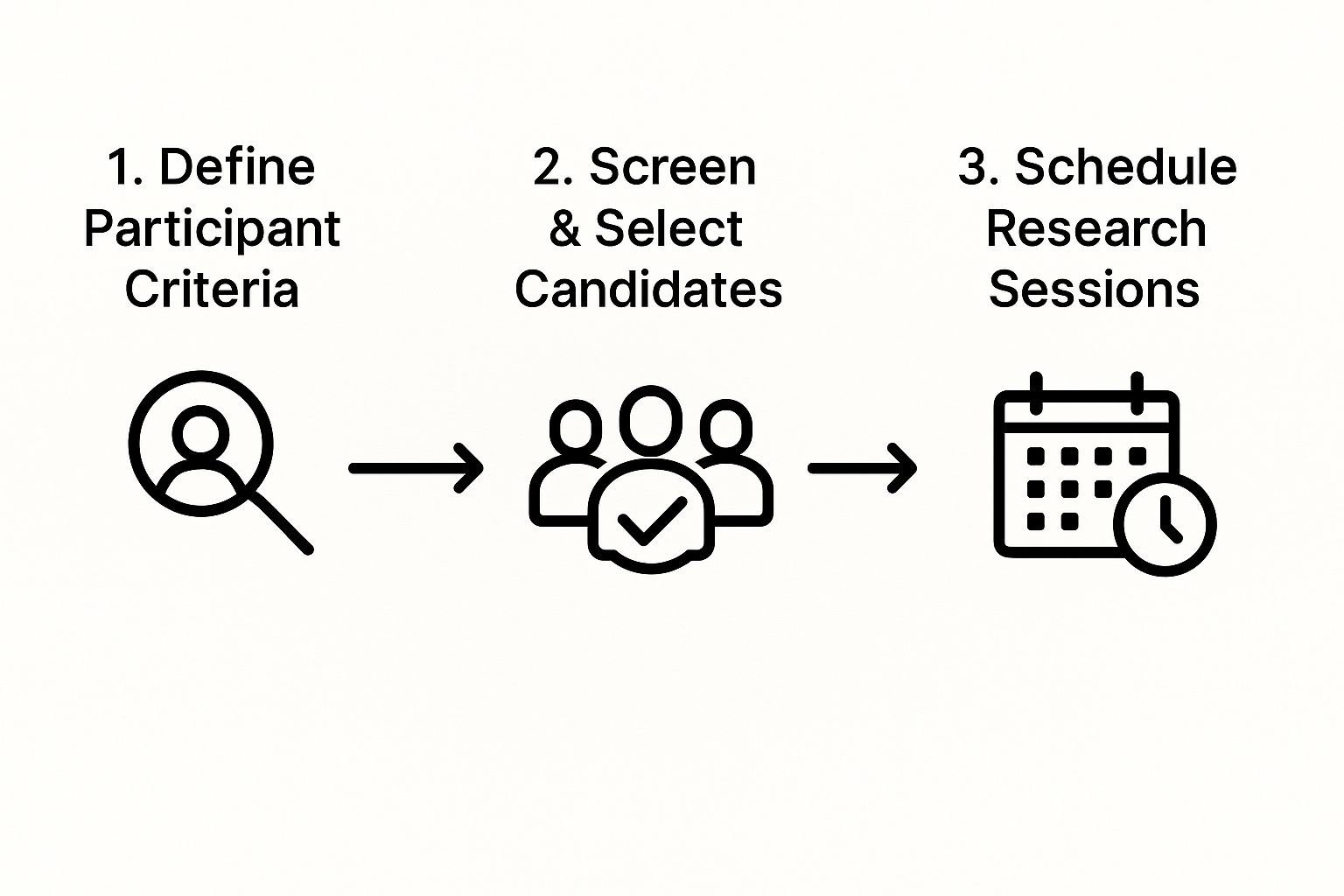

This chart lays out the recruitment pipeline that follows once your plan is set: defining who you need, screening candidates, and getting them on the calendar. These logistical steps are the pillars holding up your research foundation.

Choosing Your Research Method

With your goals and questions in hand, you can now confidently pick the right research method. This is a critical choice. Different methods are built to answer different kinds of questions. The two main buckets you'll be choosing from are qualitative and quantitative research.

Qualitative research is all about the "why" behind what people do. It involves direct observation and conversation to gather rich, contextual stories and insights.

- Best for: Exploring problems, understanding motivations, and gathering detailed feedback.

- Common methods: One-on-one interviews, usability testing, and field studies.

Quantitative research focuses on the "what" and "how many." It uses structured data collection from a larger sample size to measure behaviors and attitudes in a way you can count.

- Best for: Validating hypotheses, measuring performance, and spotting trends.

- Common methods: Surveys, A/B tests, and analytics reviews.

In my experience, the real magic happens when you combine them. A mixed-methods study can be incredibly powerful. For example, your analytics (quantitative) might show that 70% of users are abandoning the checkout process. Okay, that's alarming, but it doesn't tell you why. So, you follow up with interviews (qualitative) with a handful of those users to hear their stories and see where they got stuck.

Suddenly, you have both the statistical "what" and the narrative "why." This gives your team the complete picture. Getting this foundation right is easily the most critical part of learning how to conduct user research that delivers real value.
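To make the checkout example concrete, here is a minimal Python sketch of how you might compute step-by-step drop-off from an analytics export. The step names and counts are hypothetical, chosen so that overall abandonment comes out at 70%; the export format is an assumption, so adapt it to whatever your analytics tool actually provides.

```python
from typing import List, Tuple

# Each step is (step name, number of users who reached it), in funnel order.
Funnel = List[Tuple[str, int]]


def step_drop_off(steps: Funnel) -> List[Tuple[str, float]]:
    """Share of users lost between each pair of consecutive steps."""
    report = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        rate = 1 - count_b / count_a if count_a else 0.0
        report.append((f"{name_a} -> {name_b}", rate))
    return report


def overall_abandonment(steps: Funnel) -> float:
    """Share of users who entered the funnel but never reached the last step."""
    first, last = steps[0][1], steps[-1][1]
    return 1 - last / first if first else 0.0


# Hypothetical analytics export for a checkout flow.
checkout = [("cart", 1000), ("shipping", 600), ("payment", 420), ("confirmed", 300)]

print(f"Overall abandonment: {overall_abandonment(checkout):.0%}")
for transition, rate in step_drop_off(checkout):
    print(f"{transition}: {rate:.0%} drop-off")
```

The overall number only tells you that people leave; the per-step rates narrow down where they leave, which tells you which moments to probe in the follow-up interviews.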

Let’s be honest: your research is only as good as the people you talk to.

You can have the most dialed-in research plan on the planet, but if you're interviewing the wrong people, you'll end up with skewed insights and flawed conclusions. Getting recruitment right takes a mix of art, science, and a little bit of detective work.

Recruiting isn't just about finding warm bodies to fill a Zoom call. It's about pinpointing the exact people whose real-world experiences, habits, and frustrations align with the problem you're trying to solve. Think about it: the "user" of a niche B2B tool for network engineers is a world away from someone ordering coffee through a mainstream consumer app. Your recruitment strategy has to reflect that difference.

It all circles back to your initial research goals. Before you even think about writing a screener question, revisit your plan. Who are you really building this for? What specific traits define them? Go deep. Look past basic demographics like age and location and get to the good stuff: behaviors, contexts, and motivations.

Crafting a Screener That Actually Works

Your screener survey is your main tool for filtering a large pool of applicants down to your ideal participants. The goal is twofold: qualify the right people and, just as crucially, disqualify the wrong ones. You have to do this without tipping your hand about the exact profile you're looking for, which keeps people from gaming the system just to snag an incentive.

I like to think of it as a funnel. Your first few questions should be broad to weed out any obvious mismatches right away. From there, each question should get a little more specific, slowly zeroing in on the key behaviors and attitudes you need.

A few hard-won tips for writing effective screeners:

- Keep it neutral. Don't ask leading questions that scream the "right" answer. Instead of, "Are you frustrated with managing your team's tasks?" try something like, "How do you currently manage your team's tasks?"

- Toss in some red herrings. When asking multiple-choice questions about software or brands, add a few plausible-sounding but fictitious options. This is a great way to catch people who are just clicking what they think you want to hear.

- Focus on past behavior. What someone did recently is a far more reliable indicator of their habits than what they say they’ll do.

A great screener doesn't just ask who someone is; it asks what they do. Focusing on real, recent behaviors is the fastest way to find participants who can provide authentic, valuable insights.
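Screeners usually live in a survey tool, but it's worth writing the qualification logic down explicitly so the whole team applies it the same way. Here is a minimal Python sketch of the funnel described above: broad filter first, then red herrings, then recent behavior. The questions, the 14-day window, and the made-up tool names used as red herrings ("Trackerly Nine", "Plannomatic") are all hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical red herrings: invented tool names that should not exist.
# Anyone who claims to use one is probably guessing.
RED_HERRINGS = {"Trackerly Nine", "Plannomatic"}


@dataclass
class ScreenerResponse:
    respondent_id: str
    manages_team: bool                       # broad question, asked first
    tools_used: Set[str] = field(default_factory=set)
    days_since_last_task_review: int = 9999  # recent behavior, asked last


def qualifies(resp: ScreenerResponse, max_days: int = 14) -> bool:
    """Funnel-style screening: broad filter, then red herrings, then recent behavior."""
    if not resp.manages_team:
        return False  # obvious mismatch, weeded out first
    if resp.tools_used & RED_HERRINGS:
        return False  # picked a fictitious option
    # Favor what people did recently over what they say they would do.
    return resp.days_since_last_task_review <= max_days


responses = [
    ScreenerResponse("r1", True, {"Trello", "spreadsheet"}, 3),
    ScreenerResponse("r2", True, {"Asana", "Plannomatic"}, 2),
    ScreenerResponse("r3", False, {"Jira"}, 1),
    ScreenerResponse("r4", True, {"Jira"}, 60),
]
print([r.respondent_id for r in responses if qualifies(r)])
```

Only the first respondent passes here: one picked a fictitious tool, one doesn't manage a team, and one hasn't done the behavior recently.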

This is one of those stages where a sharp eye for detail is everything. If your team is new to this, it might be the right time to bring in some help. Our guide on how to hire designers has some great principles that work just as well for finding a top-notch researcher.

Where to Find Your Participants

Okay, your screener is ready to go. Now, where do you find the people to take it? My experience is that casting a wide net across a few different channels gets the best results.

| Recruitment Channel | Pros | Cons |
|---|---|---|
| Your Existing Users | Highly relevant, already engaged with your product. | Can introduce bias; may not represent non-users. |
| Social Media/Communities | Good for finding niche groups (e.g., Reddit, LinkedIn). | Can be time-consuming; quality varies wildly. |
| Recruitment Platforms | Fast, access to a large pool, handles logistics. | Can be expensive; participants may be "professional testers." |

If you're working on a consumer app, targeting relevant subreddits or Facebook groups can be a goldmine. For that specialized B2B tool, you’ll have much better luck tapping into your own customer list or hitting up professional networks on LinkedIn.

Logistics and Ethics Matter

Once you have a pool of qualified candidates, you’re on the home stretch. But don't gloss over the final details: logistics and ethics. This means figuring out fair incentives, handling the scheduling, and getting informed consent.

Your incentive should be fair compensation for someone's time and expertise. A $25 gift card might be perfectly fine for a 30-minute chat about a consumer app. But if you're asking a specialized professional to give you an hour of their time, you should be prepared to offer $100-$200 or more.

Please, save yourself the headache and use a scheduling tool to avoid the endless back-and-forth emails. And finally, before any session kicks off, make sure every single participant understands what the research involves and signs a consent form. It builds trust, and frankly, it's a non-negotiable part of doing ethical, professional research.

Alright, this is where all the legwork in planning and recruiting pays off. It's time to actually sit down with people and uncover the insights that will genuinely shape your product.

Let's be clear: the goal isn't just to mechanically ask a list of questions. You’re aiming for a guided conversation that reveals the real story behind why a user does what they do.

The quality of the data you collect completely hinges on your ability to make participants feel comfortable and truly heard. A successful session feels less like a formal interrogation and more like a natural, collaborative chat.

Your role as the moderator is to guide, listen, and probe—all while staying as neutral as possible. This is one of the most hands-on parts of the research process, and honestly, it’s a skill you build with pure practice.

Running a User Interview Like a Conversation

Think of a great user interview as a masterclass in active listening. Your main job is to create an environment where the participant feels safe enough to be completely honest with you.

Start by building some rapport. Spend the first few minutes on casual conversation to break the ice before you even think about your research questions. Always remind them that there are no right or wrong answers and that their candid feedback is the most helpful thing they can possibly give you.

Once you get going, let the participant do most of the talking. A good rule of thumb I always follow is the 80/20 rule: they should be speaking 80% of the time, while you only speak 20%. Your questions need to be open-ended, encouraging them to tell stories rather than just giving you a simple "yes" or "no."

For instance, instead of asking, “Do you like this feature?” try something like, “Can you walk me through the last time you used this feature?” This opens the door to rich, contextual details you would’ve completely missed otherwise. When you’re doing these remotely, applying some general virtual interview tips can make a huge difference in the quality of your chats.

The most powerful insights often come from the follow-up question you almost didn't ask. When a participant says something interesting, don't just nod and move on. Gently ask, "Can you tell me more about that?" or "Why was that important to you?"
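If your recording or transcription tool exports speaker-labeled segments with timestamps, you can check yourself against the 80/20 guideline after each session. The sketch below assumes a simple (speaker, start, end) format, which is hypothetical; adapt it to whatever your tool actually exports. Overlapping speech is counted for both speakers, so treat the result as a rough self-check rather than a precise measure.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (speaker label, start seconds, end seconds) for each transcript segment.
Segment = Tuple[str, float, float]


def talk_shares(segments: List[Segment]) -> Dict[str, float]:
    """Fraction of total speaking time attributed to each speaker."""
    seconds: Dict[str, float] = defaultdict(float)
    for speaker, start, end in segments:
        seconds[speaker] += max(0.0, end - start)
    total = sum(seconds.values())
    return {s: t / total for s, t in seconds.items()} if total else {}


# Hypothetical excerpt from a speaker-labeled transcript.
session = [
    ("moderator", 0, 10),
    ("participant", 10, 50),
    ("moderator", 50, 55),
    ("participant", 55, 95),
]
shares = talk_shares(session)
print(f"Participant spoke {shares['participant']:.0%} of the time")
```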

Mastering the Art of Moderation

Effective moderation is a delicate dance. You need to keep the conversation on track without stifling it. One of the biggest mistakes I see new moderators make is "leading the witness"—phrasing questions in a way that suggests a desired answer. You have to strive for neutrality.

Another common pitfall is jumping in too quickly to fill a silence. I get it, silence can feel awkward, but it often just means the participant is thinking deeply. Give them a moment. More often than not, they’ll break that silence with a gem of an insight.

To keep your sessions consistent and effective, it helps to have a mental checklist of common moderator mistakes to avoid:

- Asking leading questions: "Don't you think this button is confusing?"

- Answering questions for the user: If they ask, "What should I do here?" reply with, "What would you expect to do?"

- Rushing the participant: Let their story unfold at its own pace.

- Correcting the user: Never, ever say, "You're using it wrong." Your goal is to understand their mental model, not teach them yours.

These skills are universal. They apply across different research methods, from one-on-one interviews to more formal usability tests where you're observing a user interacting with a prototype.

Beyond Interviews: Other Essential Methods

While interviews are fantastic for digging into the "why," other methods are essential for gathering different types of insights. Crafting an effective survey, for example, lets you collect quantitative data at scale. It’s perfect for validating patterns you think you've noticed in your qualitative sessions.

Usability testing is another critical tool in the shed. Here, the focus shifts from conversation to pure observation. You give a participant specific tasks to complete with your product or prototype and just watch—see where they succeed and, more importantly, where they struggle. This method is second to none for pinpointing specific friction points in your UI.

Thankfully, collecting and making sense of all this data has gotten a lot easier with modern tools. That shift is reflected in the rapid expansion of the global user research software market, which was valued at USD 245.46 million in 2024 and is projected to reach USD 719.94 million by 2033. This growth suggests that dedicated platforms are becoming the standard way to systematically collect, analyze, and act on user feedback.

Whether you're running interviews, launching a survey, or moderating a usability test, the core principle is always the same: approach every single interaction with genuine curiosity. Your job is to step into your user's world and see your product through their eyes.

Alright, you've done the interviews, run the surveys, and watched the session recordings. The data is officially collected. Now what?

This next part is where your research starts paying off. It's the moment you transform that mountain of raw notes, numbers, and observations into a clear, compelling strategy your team can actually get behind and build from.

It can feel a little overwhelming staring at everything you’ve gathered. But really, you’re just looking for the story hidden inside the data. The goal is to move from a jumble of individual comments to a cohesive narrative that explains what’s happening, why it’s a big deal, and exactly what to do next. Skip this, and all that hard work risks becoming just an interesting folder of facts no one ever looks at again.

Making Sense of Qualitative Data

Let’s start with the rich, messy stuff from your interviews and usability tests. Your first job is to hunt for the patterns. One of the best, most hands-on ways to do this is with affinity mapping (sometimes called affinity diagramming). It’s a surprisingly low-tech but powerful method that helps you organize all those insights visually.

Picture this: every key observation, standout quote, or user pain point gets its own sticky note.

- Pull Out the Good Stuff: Go through all your notes and recordings. Every time you find an interesting insight, a direct quote that hits hard, or a moment of friction, write it down on a single sticky note. Don't filter yourself yet; just get it all out.

- Group 'Em Up: Get your team in a room with a big wall or whiteboard. Without talking, everyone starts moving the sticky notes around, placing related ideas next to each other. You'll quickly see natural clusters and themes begin to form on their own.

- Give the Groups a Name: Once the dust settles and the groups look solid, talk them over as a team. Give each cluster a name that sums up its central idea. This is where your high-level insights start taking shape.

For instance, you might end up with a cluster of notes that say, "Couldn't find the save button," "Wasn't sure if my work was saved automatically," and "I kept looking for a confirmation message." Boom. You can label that whole group "Uncertainty Around Saving Progress." Just like that, you’ve got a named user problem backed by direct evidence.

Affinity mapping is how you turn chaos into clarity. It’s a bottom-up approach that forces the themes to emerge directly from what users told you, which helps minimize your own bias and gets the whole team on the same page.

This process keeps you honest. It stops you from just cherry-picking the data that confirms what you already thought and forces you to listen to what the patterns are really telling you.
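Once the wall is sorted, it helps to capture the result in a form you can share and revisit. Here is a minimal Python sketch, assuming you transcribe each sticky note along with the participant it came from and the theme the team assigned. It ranks themes by how many different participants they came up for, so one very vocal participant can't make a theme look bigger than it is. The field names and the second theme ("Unclear Navigation Icons") are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Note:
    participant: str  # who the observation came from
    text: str         # the quote or observation on the sticky note
    theme: str        # the cluster name the team agreed on


def summarize_themes(notes: List[Note]) -> List[Tuple[str, int, List[str]]]:
    """Rank themes by how many distinct participants they came up for."""
    people: Dict[str, Set[str]] = defaultdict(set)
    evidence: Dict[str, List[str]] = defaultdict(list)
    for note in notes:
        people[note.theme].add(note.participant)
        evidence[note.theme].append(note.text)
    rows = [(theme, len(who), evidence[theme]) for theme, who in people.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


notes = [
    Note("P1", "Couldn't find the save button", "Uncertainty Around Saving Progress"),
    Note("P2", "Wasn't sure if my work was saved automatically", "Uncertainty Around Saving Progress"),
    Note("P3", "I kept looking for a confirmation message", "Uncertainty Around Saving Progress"),
    Note("P2", "Didn't know what the sidebar icons meant", "Unclear Navigation Icons"),
]
for theme, participant_count, quotes in summarize_themes(notes):
    print(f"{theme}: {participant_count} participants, {len(quotes)} notes")
```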

Interpreting Your Quantitative Data

While your qualitative data gives you the rich "why," the quantitative stuff from surveys and analytics tells you the "what" and "how many." It gives you scale. When you dig into this data, you're looking for trends that are statistically significant, not just numbers that look interesting at a glance.

Don't just stop at the summary percentages in your survey results. You have to slice the data to find the real story. Sure, finding that 60% of users are satisfied with a feature is good to know. But what if you discover that 90% of new users are satisfied, while only 30% of your power users are? That's a much more specific, and frankly, more interesting problem to solve.
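Here is one way to do that slicing in plain Python, sketched under the assumption that each survey response has already been tagged with a segment label such as "new" or "power." The data is hypothetical, built to reproduce the 60% / 90% / 30% example above.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each response: (segment label, whether the respondent said they were satisfied).
Response = Tuple[str, bool]


def satisfaction_rate(responses: List[Response]) -> float:
    """Share of all respondents who reported being satisfied."""
    return sum(satisfied for _, satisfied in responses) / len(responses)


def satisfaction_by_segment(responses: Iterable[Response]) -> Dict[str, Tuple[float, int]]:
    """Return {segment: (satisfaction rate, number of responses)}."""
    tallies = defaultdict(lambda: [0, 0])  # segment -> [satisfied, total]
    for segment, satisfied in responses:
        tallies[segment][0] += int(satisfied)
        tallies[segment][1] += 1
    return {seg: (sat / total, total) for seg, (sat, total) in tallies.items()}


# Hypothetical survey results: 50 new users and 50 power users.
responses = (
    [("new", True)] * 45 + [("new", False)] * 5
    + [("power", True)] * 15 + [("power", False)] * 35
)

print(f"Overall: {satisfaction_rate(responses):.0%}")
for segment, (rate, n) in satisfaction_by_segment(responses).items():
    print(f"{segment}: {rate:.0%} satisfied (n={n})")
```

Reporting the response count next to each rate is deliberate: a segment with only a handful of respondents can swing wildly, so check that a gap holds up before building a roadmap around it.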

A few key metrics to keep an eye on:

- Task Success Rate: What percentage of people actually completed the task you gave them? If this number is low for a core feature, you've got a serious problem.

- Time on Task: How long did it take? Unusually long times are a classic sign of friction and confusion.

- Error Rate: How many times did people mess up? This helps you zero in on the most confusing parts of your UI.

- Satisfaction Scores (CSAT, SUS, etc.): How did users actually rate the experience? This gives you a baseline you can track over time.

Having these hard numbers to back up the stories you uncovered in your interviews makes your final report incredibly persuasive.
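To make those metrics concrete, here is a minimal Python sketch that computes them from per-attempt session logs. The TaskAttempt record is a hypothetical format, and reporting the median time on task is a design choice: one participant who gets badly stuck can drag a mean far off. The SUS function follows the standard scoring for the ten-item System Usability Scale: odd-numbered (positively worded) items contribute the answer minus 1, even-numbered (negatively worded) items contribute 5 minus the answer, and the sum is multiplied by 2.5 to give a score from 0 to 100.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, List, Sequence


@dataclass
class TaskAttempt:
    participant: str
    completed: bool  # did they finish the task?
    seconds: float   # time on task
    errors: int      # wrong clicks, dead ends, etc., as logged by the observer


def task_metrics(attempts: List[TaskAttempt]) -> Dict[str, float]:
    return {
        "success_rate": sum(a.completed for a in attempts) / len(attempts),
        # Median resists distortion from one participant who got badly stuck.
        "median_seconds": median([a.seconds for a in attempts]),
        "errors_per_attempt": mean([a.errors for a in attempts]),
    }


def sus_score(answers: Sequence[int]) -> float:
    """Score one System Usability Scale questionnaire (10 items, each 1-5)."""
    if len(answers) != 10 or not all(1 <= a <= 5 for a in answers):
        raise ValueError("SUS needs exactly ten answers between 1 and 5")
    total = 0
    for index, answer in enumerate(answers):
        if index % 2 == 0:  # items 1, 3, 5, 7, 9 are positively worded
            total += answer - 1
        else:               # items 2, 4, 6, 8, 10 are negatively worded
            total += 5 - answer
    return total * 2.5  # scale the 0-40 raw sum to 0-100


# Hypothetical results for one task across four participants.
attempts = [
    TaskAttempt("P1", True, 60, 0),
    TaskAttempt("P2", True, 90, 1),
    TaskAttempt("P3", False, 240, 3),
    TaskAttempt("P4", True, 75, 0),
]
print(task_metrics(attempts))
print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```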

Choosing the Right User Research Method

Deciding on the right method from the start is key. Your choice depends on what you need to learn, how much time you have, and the resources at your disposal. This table breaks down some common methods to help you pick the right tool for the job.

| Method | Best For | Data Type | Typical Sample Size |
|---|---|---|---|
| User Interviews | Understanding deep motivations, behaviors, and "why" behind actions. | Qualitative | 5-10 participants |
| Usability Testing | Identifying pain points and friction in a specific user flow or interface. | Qualitative/Quantitative | 5-8 participants per user group |
| Surveys | Measuring attitudes and collecting feedback from a large user base. | Quantitative | 100+ respondents |
| A/B Testing | Comparing two versions of a design to see which one performs better. | Quantitative | 1,000+ users per variation |
| Card Sorting | Figuring out how users mentally group content to inform your IA. | Qualitative | 15-20 participants |

Each method offers a unique lens into your user's world. Often, the most powerful insights come from combining a few of them—like pairing the "why" from interviews with the "what" from a survey.
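The "1,000+ users per variation" figure for A/B tests is best read as a floor: the real number depends on your baseline rate and how small a difference you want to detect. Here is a minimal Python sketch using the common normal-approximation formula for comparing two proportions (two-sided test, unpooled variance). The 10% and 13% conversion rates are hypothetical, and dedicated calculators may use slightly different variants that return slightly different numbers. It needs Python 3.8+ for statistics.NormalDist.

```python
import math
from statistics import NormalDist


def ab_sample_size(
    p_baseline: float, p_variant: float, alpha: float = 0.05, power: float = 0.80
) -> int:
    """Approximate users needed per variation to detect p_baseline -> p_variant."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_baseline * (1 - p_baseline) + p_variant * (1 - p_variant)
    effect = p_variant - p_baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


print(ab_sample_size(0.10, 0.13))  # a three-point lift
print(ab_sample_size(0.10, 0.11))  # a one-point lift
```

Under these assumptions, detecting a lift from 10% to 13% takes roughly 1,800 users per variation, and shrinking the target difference to one percentage point pushes the requirement to nearly 15,000.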

Weaving Your Findings into a Compelling Narrative

The final step is to bring it all together. A great research report isn't a data dump—it's a story that connects user needs directly to business goals.

Structure your report around key themes, not the methods you used. So, instead of a chapter on "Interview Findings" and another on "Survey Findings," create sections like "Users Struggle with Initial Onboarding." Then, back that theme up with powerful quotes from your interviews and the survey data showing high drop-off rates in the first week.

This whole process—from research to analysis and synthesis—is a critical piece of the puzzle. To see how these user insights plug into the bigger picture, check out our guide on the essential design process steps.

Ultimately, your recommendations need to be concrete, actionable, and tied directly to the evidence you’ve laid out. Getting this right is more important than ever. The global UX market is forecasted to explode from USD 3.5 billion in 2023 to nearly USD 9.8 billion by 2033. That growth is happening for a reason: companies know that deeply understanding users is fundamental to success. You can read more about the UX market's expansion to see just how big this trend is. By turning raw data into a clear strategy, you’re setting your organization up to be a part of that successful future.

Common User Research Questions Answered

Once teams start getting their hands dirty with user research, a few familiar questions and roadblocks always seem to surface. It’s just a natural part of the learning curve.

Knowing how to handle these hurdles can mean the difference between a research practice that takes off and one that just stalls out. Let’s jump into some of the most frequent questions I hear.

How Can We Do This With No Budget?

This is the big one. I hear it all the time, especially from startups and smaller teams. The good news? You absolutely don't need a massive budget to get powerful insights. Some of the most game-changing research is born from being scrappy.

Your existing network is your best friend here. Start by talking to your current customers. They have the most relevant feedback and are often happy to chat for a simple thank-you or a small, non-monetary perk.

You can also find potential participants in online communities on Reddit or LinkedIn where your audience hangs out. It takes a bit more legwork, but it's completely free.

As for tools, there are plenty of free and low-cost options for everything from scheduling to video calls to surveys. The secret is to focus on the quality of your conversations, not the price tag of your software.

How Many Users Do We Need to Talk To?

There’s no magic number here. The right answer depends entirely on what you’re trying to learn and the method you're using. For qualitative stuff like one-on-one interviews or usability testing, you'll be surprised how much you can learn from just a handful of people.

A well-known rule of thumb, popularized by Jakob Nielsen, is that testing with just five users uncovers about 85% of the usability issues in an interface, as long as those users come from the same user group. After that, you start hearing the same things over and over again, and the return on each additional session drops off.
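The five-user figure comes from a simple model by Nielsen and Landauer: if each test user reveals a fraction L of the problems, then n users reveal 1 - (1 - L)^n of them. With their reported average of L ≈ 0.31, five users find about 84%. Here is a quick Python sketch; the discovery rate is the key assumption, and for complex products or mixed audiences it can be much lower, which means you need more users.

```python
def share_of_problems_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Nielsen-Landauer model: expected share of usability problems found by n users."""
    return 1 - (1 - discovery_rate) ** n_users


for n in (1, 3, 5, 8, 15):
    print(f"{n:>2} users -> {share_of_problems_found(n):.0%} of problems found")

# A lower discovery rate (e.g., a complex product) changes the picture.
print(f"5 users at L=0.15 -> {share_of_problems_found(5, 0.15):.0%}")
```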

Now, for quantitative methods like surveys, you're playing a different game. You'll need a much larger sample size to get reliable results you can generalize from, often 100 participants or more. The key is to match your sample size to your research goals.
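Where does "100 or more" come from? One common way to size a survey is by the margin of error you can tolerate when estimating a proportion. The Python sketch below uses the standard formula n = z^2 * p(1 - p) / e^2, with p = 0.5 as the most conservative guess and an optional finite population correction. It assumes a random sample; self-selected survey respondents rarely are one, so treat the result as a lower bound. At 95% confidence, a ±10% margin needs 97 responses and a ±5% margin needs 385.

```python
import math
from statistics import NormalDist
from typing import Optional


def survey_sample_size(
    margin_of_error: float,
    confidence: float = 0.95,
    proportion: float = 0.5,
    population: Optional[int] = None,
) -> int:
    """Responses needed to estimate a proportion within +/- margin_of_error."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = z ** 2 * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        # Finite population correction: small user bases need fewer responses.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


print(survey_sample_size(0.10))                  # +/-10% at 95% confidence
print(survey_sample_size(0.05))                  # +/-5% at 95% confidence
print(survey_sample_size(0.05, population=500))  # same, but only 500 users exist
```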

How Do We Get Stakeholder Buy-In?

This is crucial. You can't build a real research culture without leadership on your side. Stakeholders, especially those watching the bottom line, need to see how research connects directly to business outcomes.

The trick is to frame research not as a cost, but as a risk-reduction strategy.

Instead of just asking for money, present a clear plan. Show exactly how user insights will help the team avoid building the wrong thing, focus on features that actually matter, and ultimately save a ton of time and money. Share past wins, no matter how small, to prove the value of listening to users.

Honestly, getting this support often feels like you're pitching an investor. You have to tell a compelling story backed by solid evidence. If you're looking for tips on making your case, our guide on how to pitch investors has some great principles that work surprisingly well here, too.

How Do We Know If Our Research Is Any Good?

Ah, the "is this actually legit?" question. It's a big one. How can you feel confident in your findings? Unlike slow-moving academic research, industry research has to be fast and impactful. But that doesn't mean you sacrifice quality.

Good research is simply research that helps your team make better decisions. Period.

Here are a few signs you're on the right track:

- You had a clear plan: You started with specific goals and questions.

- You found the right people: You talked to users who actually represent your target audience.

- Your methods were unbiased: You worked hard to avoid leading questions or influencing answers.

- Your findings were actionable: Your insights led to real, concrete next steps for the team.

Ultimately, the best measure of your research is its impact. Did it change the conversation in the room? Did it give the team the confidence to move forward? If the answer is yes, you're doing it right.